CHAPTER NINE

Information is important for the functioning of markets. However, information cannot be acted upon without attention, and thus attention capture and information are essential to a functioning market economy, or indeed any competitive process.1 We also know that attention is a scarce resource that plays a significant part in data-driven marketing.

Information is contextual and dependent on the receiver’s frame of reference. When a trader states that ‘your privacy is important to us’, it is likely true, but the statement may have a very different meaning for the trader and consumer, respectively. As Frank Luntz emphasises in the subtitle of his book: ‘it’s not what you say, it’s what people hear’.2

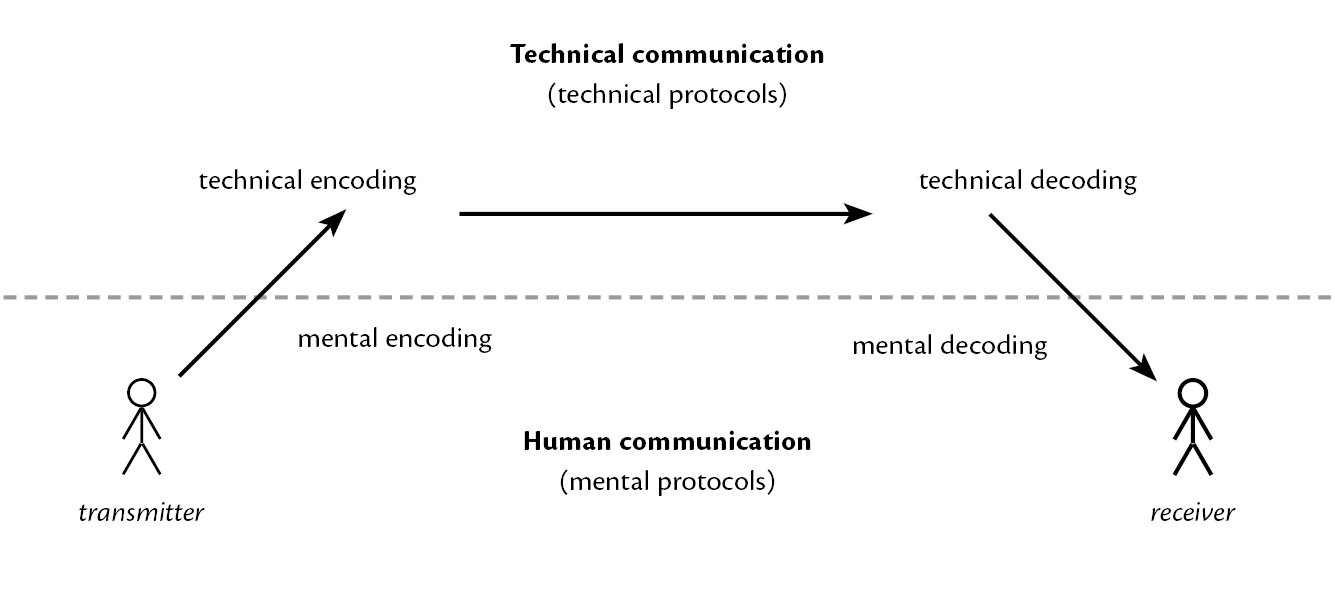

The internet is all about information, communication and technical infrastructure. Communication (derived from the Latin communicare, ‘to share’) between people requires a common language (a protocol), which is used to convey meaning between two or more parties. In 1948, Claude Shannon introduced a communication model3 that consists of five parts and recognises possible sources of noise between the transmitter and the receiver; the effects of such noise can be largely eliminated through digitisation and redundancy. Meaning can be ignored in the technical layer,4 as Shannon focuses on the transmission of information.

Figure 9.1. An illustration of communication inspired by Claude Shannon and Frank Luntz. In addition to technical coding, the message must be encoded and decoded by means of a common language (protocol).
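The role of redundancy in the technical layer can be illustrated with a minimal sketch. It assumes a simple repetition code with majority voting, which is a textbook illustration and not Shannon’s own construction; the function names and the flip probability are hypothetical and chosen only for this example.

```python
import random


def encode(bits, repeat=3):
    """Add redundancy: transmit every bit `repeat` times."""
    return [b for b in bits for _ in range(repeat)]


def noisy_channel(signal, flip_probability, rng):
    """Flip each transmitted bit independently with the given probability."""
    return [b ^ 1 if rng.random() < flip_probability else b for b in signal]


def decode(signal, repeat=3):
    """Recover each bit by majority vote over its group of `repeat` copies."""
    groups = [signal[i:i + repeat] for i in range(0, len(signal), repeat)]
    return [1 if sum(group) > repeat // 2 else 0 for group in groups]


def count_errors(sent, received):
    """Count the positions at which the received message differs."""
    return sum(s != r for s, r in zip(sent, received))
```

Sending a message once through `noisy_channel` and comparing it with `decode(noisy_channel(encode(message), ...))` shows the trade-off: the protected message is three times as long, but a single flipped bit within a group no longer changes what the receiver decodes. Nothing in this layer addresses whether the receiver understands the message, which is the point of the second (mental) protocol in the figure.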

Non-verbal cues are important to human communication,5 and the trader’s mental protocol for encoding may be different from the mental protocol that the consumer uses for decoding. The Mehrabian ‘rule’ suggests that 7% of meaning is communicated through spoken word (verbal), 38% through tone of voice (vocal), and 55% through body language (facial).6

In this book, we use the term ‘information’ for what is being communicated, and ‘transparency’ for the situation in which the consumer understands the information. As the consumer is expected to be an active participant in the market, some effort (overcoming friction) on his part is required for the market to function properly.

1. Transparency and empowerment

Transparency is a prerequisite for empowerment,7 and information requirements permeate both consumer protection law and data protection, as discussed in Chapter 4 (data protection law) and Chapter 5 (marketing law).8 Transparency is closely linked to the consumer’s right to self-determination, according to which consumers are supposed to take informed decisions in accordance with their individual goals, values and preferences.

As discussed in Chapter 6 (human decision-making), consumers (human beings) have limited resources with regard to time, cognition, attention, etc. The concepts of information overload9 and rational apathy (deliberate ignorance)10 suggest that there is a limit to what we can comprehend and that it is (often) an efficient strategy to ignore information.

It is better to opt for a ‘satisficing strategy’ (satisfy + suffice) rather than a ‘maximising strategy’, as the latter comes at a significant cost, including the time spent on optimising the structure of information search.11 As observed by Barry Schwartz, ‘satisficing is, in fact, the maximizing strategy’,12 i.e. choosing informed intuition over analytical reason as the most trustworthy decision-making tool.13 To seek legal counsel to decode commercial communication is seldom economically or practically viable for consumers.

Articles 13 and 14 GDPR contain comprehensive information requirements that apply when informing the data subject about the data controller’s identity and contact details, the purposes for and categories of personal data processed, and the data subject’s rights, as well as other information ‘necessary to ensure fair and transparent processing in respect of the data subject’. When the data controller relies on the balancing test as the legitimate basis, the data subject must be informed about the legitimate interests pursued.

In the context of entering consumer contracts, the Consumer Rights Directive14 stipulates extensive information requirements concerning the product, trader, price, etc. This is further supported by Articles 6 and 7 UCPD, which (in commercial practices) prohibit misleading information and the omission of ‘material information that the average consumer needs, according to the context, in order to take an informed transactional decision’ (emphasis added). Even though information about the processing of personal data is not explicitly mentioned in the UCPD, failing to comply with the information requirements in the GDPR is likely also to constitute an unfair commercial practice.

Transparency is also important to ensure compliance with the purpose limitation principle in Article 5(1)(b) GDPR, which requires that personal data be collected only for specified, explicit and legitimate purposes. Similarly, consent must be both specific and informed. In addition, the request for consent must be presented in a manner that is clearly distinguishable from other matters, such as contractual terms.15 The purpose for collection must be ‘detailed enough to determine what kind of processing is and is not included within the specified purpose’, and vague or general purposes, such as ‘“improving users’ experience”, “marketing purposes”, “IT-security purposes” or “future research”—without further detail—will usually not meet the criteria for being “specific”’.16 In order to be ‘specific’, the controller must apply:17

- purpose specification as a safeguard against function creep,
- granularity in consent requests, and
- clear separation of information related to obtaining consent for data processing activities from information about other matters.

Article 12(1) GDPR provides that information must be (a) in writing (including by electronic means) and (b) provided in a ‘concise, transparent, intelligible and easily accessible form, using clear and plain language’. Similarly, the Consumer Rights Directive requires that information be provided in a clear and comprehensible manner.

1.1. The information paradigm

Under EU law, consumers are to a large extent expected to read and understand relevant information, and to ‘be aware of the purpose and effects of advertising and sales promotions’.18 This is known as the ‘information paradigm’, which permeates EU consumer policies.19 This is beautifully embraced by Advocate General Fennelly of the CJEU:

‘Community law […] has preferred to emphasise the desirability of disseminating information, whether by advertising, labelling or otherwise, as the best means of promoting free trade in openly competitive markets. The presumption is that consumers will inform themselves about the quality and price of products and will make intelligent choices.’20

The information paradigm relies primarily on two assumptions: (1) information is recognised as the least intrusive (and thus, the preferred) form of market intervention, and (2) consumers are able to read, understand and consider available information so as to make informed (‘rational’) decisions. In its interpretation of item 11 on the UCPD’s blacklist, concerning advertorials, the CJEU has emphasised that the purpose of the provision is to ensure (a) indication of commercial influence and (b) that the indication is ‘understood as such by the consumer’.21

Thus, there is a long-standing tradition of expecting consumers to read information, assuming, for instance, in the context of foodstuffs that consumers—whose purchasing decisions depend on the composition of the products in question—will read the mandatory list of ingredients first.22 This, however, does not preclude the possibility that other elements of the marketing of a product may be misleading23 or aggressive. For data-driven business models, this could translate into the assumption (in consumer protection law) that at least those consumers who care about privacy will read (and understand) the relevant information provided by the trader.

Consumers may choose to ignore information and thus bear the risk; a risk that to some extent is mitigated by the consumer’s right of withdrawal,24 the trader’s liability for ‘lack of conformity’25 and the non-binding nature of contract terms that are unfair.26 For consumer rights to be effective, consumers must be aware of and ready to pursue their rights. In some situations, sunk costs and network effects may increase the consumer’s switching costs to an extent that, for instance, the termination of a contract is not ideal, including deleting your profile on a social media platform.

In the Planet49 case, the CJEU noted that ‘it is not inconceivable that a user would not have read the information accompanying the preselected checkbox, or even would not have noticed that checkbox […].’27 This does not mean that information accompanying (unchecked) checkboxes is assumed to be read per se. As in consumer law, such an assumption would be a practical solution, however poorly aligned with reality. This is corroborated by the fact that under data protection law, and in contrast to consumer protection law, the trader (data controller) is accountable for ensuring both the transparency and the legitimacy of processing.

In both consumer protection law28 and data protection law,29 it seems sufficient that the consumer has been able to read information in order to make an informed decision, probably assuming that the decision not to read information is a sufficiently informed decision in itself. However, it is required that the trader exercise sufficient care—in both substance and form—to convey mandated information. This may include a consideration of ‘the words and depictions used as well as the location, size, colour, font, language, syntax and punctuation of […] various elements’.30

By using framing effects, privacy infringement may be framed as a benefit or in some other way appear benign.31 ‘Tracking’ and ‘improving the service’ sound more appealing than ‘surveillance’ and ‘behaviour modification’; and ‘cookies’ may appear deliciously benign … especially when seasoned with a picture of chocolate chip cookies. Similarly, ‘smart’ is a powerful, and widely accepted, framing of the processing of personal data, including by means of surveillance.

When balancing the obligations (‘due care’) expected from the trader and the consumer, respectively, the question being raised is, in essence, who should bear the risk of the consumer’s not making efficient choices, i.e. choices that match the consumer’s goals, values and preferences. This could occur, for example, if the consumer fails to read or understand information provided by the trader.

1.2. Clear and comprehensible information

The idea that information does not necessarily equal transparency (or awareness) is especially true in situations where the disclosure of information merely serves as a disclaimer, users are overwhelmed with decisions,32 and/or the information is not conveyed in a language that the user is likely to understand.33

For the use of cookies, consent must be obtained after providing the user with ‘clear and comprehensive information, in accordance with [the GDPR], inter alia, about the purposes of the processing’.34 According to recital 66 of the ePrivacy Directive, ‘the methods of providing information and offering the right to refuse should be as user-friendly as possible’. This emphasises that providing information is a function of both ‘what’ and ‘how’, similar to the distinction between misleading and aggressive practices in the UCPD.

The care that the trader must show must be directly proportional to the magnitude of the given implications, and must be assessed in light of the (average) user’s reasonable expectations. Given that privacy is a fundamental right, it must be assumed that privacy interference weighs more heavily than economic distortion in this equation.

If we accept that contemporary marketing is largely about signalling an image or a lifestyle and that consumers, generally speaking, are too busy to read (much) information, it may be difficult to maintain belief in the information paradigm as we know it. This is especially likely if we consider that in many situations it may be an efficient strategy for consumers to ignore information (rational apathy or deliberate ignorance), and sometimes biases and heuristics are a very efficient strategy for human decision-making—as long as they are not exploited by e.g. traders. Or to quote Daniel Kahneman:

‘a particularly unrealistic assumption of the rational-agent model is that agents make their choices in a comprehensively inclusive context, which incorporates all the relevant details of the present situation, as well as expectations about all future opportunities and risks.’35

2. Information asymmetries (three tiers)

In a market economy, consumers are used to making decisions with asymmetric information vis-à-vis the trader (the commercial entity). Consumer protection laws aim, inter alia, to reduce this information asymmetry so as to allow consumers to take informed decisions in accordance with their goals, values and preferences.

In general, consumers are considered to be in a weaker position vis-à-vis traders in a commercial transaction between the two parties, as discussed in Chapter 3 (regulating markets). Information is power, and information asymmetry is important to the rationale for protecting consumers.36 In this context, the relevant powers are those that relate to markets where traders are offering competing products and where consumers are expected to make rational decisions to enable the markets to work properly. Power is not necessarily measured in the trader’s market share and may also comprise powers that can be exercised over individual consumers, including by the amount of collected data (personal or not) and access to Artificial Intelligence.37 This is one reason why data protection law and consumer protection law are important supplements to competition law in the regulation of data-driven business models.

As early as the mid-1970s, the roadmap for an EU consumer policy noted that there is an ‘[…] increased abundance and complexity of goods and services afforded the consumer by an ever-widening market’ and that ‘although such a market offers certain advantages, the consumer, in availing himself of the market, is no longer able properly to fulfil the role of a balancing factor [… as] producers and distributors often have a greater opportunity to determine market conditions than the consumer’.38

In order to discuss data-driven business marketing from a consumer protection perspective, it may be helpful to focus on three tiers of information asymmetry which relate to (1) the offer, (2) human behaviour and (3) the user.

2.1. Tier 1. Seller, product and terms

Traditionally, the term ‘information asymmetry’ has been used to describe the fact that the seller (normally) has more information about himself and his products than the prospective buyer (the consumer) has.39 This imbalance has been addressed by setting information requirements in the context of, for instance, marketing,40 contracting41 and the processing of personal data.42 Legislation prohibits misleading information and mandates the disclosure of certain information. Failure to comply with these provisions may influence whether the performance of a contractual obligation is considered to be in conformity with the contract.43

From a contractual point of view, the trader has, at least in theory, an interest in drafting contract terms in plain, intelligible language to avoid running afoul of the rule stipulating that unclear terms must be interpreted in the way that is most favourable to the consumer (the so-called rule of ambiguity, in dubio contra stipulatorem).44 In more complex business models in particular, the trader has a very good understanding of revenue streams, e.g. in long-term contractual relationships.

It may be an unfair commercial practice to omit ‘material information that the average consumer needs, according to the context, to take an informed transactional decision’,45 but there is usually no obligation to explain the business model itself. Thus, the user has to infer meaning from the individual contract terms. This is the case, for example, where business models rely on subscription or subsequent selling of replacement items (e.g. ink cartridges for printers).46

Experience as well as information from mainstream media and other sources may help the consumer learn about products, traders, business models, market conditions etc. However, obtaining this information involves friction, and the trader’s information is usually more readily available.

2.2. Tier 2. Human decision-making and persuasive technology

As discussed in Chapter 6 (human decision-making) and Chapter 7 (persuasive technology), traders have a good understanding of how to influence behaviour, i.e. persuade, deceive, coerce and manipulate. This interest has grown significantly in step with the rise of mass markets and mass marketing. The idea of scientific marketing has been around for roughly a century.47

Information on human decision-making is gathered, inter alia, by applying psychological insights, observing human behaviour and studying the brain.48 These insights may be boiled down to the concept of ‘bounded rationality’,49 which includes ‘bounded willpower’.50 Add to this the knowledge of the inner workings of persuasive technology.

The marketing industry relies on experience through trial and error, and may buy access to this knowledge. This knowledge may e.g. stem from experience, advertising agencies, academic literature and/or market research. Even without consulting literature on marketing or human-computer interaction, the trader will usually have more insights into human behaviour modification than the consumer. This knowledge gives the trader a position of power that can be applied in marketing before, during and after commercial transactions, including, in particular, in the design of the user experience.51

The CJEU has recognised that the objective of achieving a high level of consumer protection in the UCPD is ‘based on the assumption that, in relation to a trader, the consumer is in a weaker position, particularly with regard to the level of information’, and has pointed out that ‘it cannot be denied that there is a major imbalance of information and expertise between those parties’.52

In contrast to information about the offer, the trader is not likely to disclose how behavioural insights are applied in marketing and/or the design of the user interface, and consumers are not likely to realise the sophistication behind persuasive technology. This asymmetry means that the trader knows more about how the average consumer is likely to react to commercial practices than the consumer himself. Even if the trader were to disclose how behavioural insights are used to design marketing and the user experience, it might well be difficult for the user to comprehend the actual, individual implications, unless the explanations were so blunt that they would scare off most of the users who would read them.

Behavioural sciences, including persuasive design, are complex concepts, and even when mainstream media occasionally expose aggressive business practices, education of the public at large may be difficult, especially when it comes to less sophisticated consumers who may be more vulnerable to the practices in question and less likely to educate themselves through mainstream media.

2.3. Tier 3. The user (personal data)

The third tier of information asymmetry concerns knowledge about individual human beings (personal data). Coupled with detailed knowledge about the behaviour of many other people, this information is valuable for predicting the behaviour of individuals, including how best to guide their behaviour in a desired direction by creating a ‘persuasion profile’.53

What is of particular importance to this tier of information asymmetry is that every user is treated differently. This is done on the basis of personal traits that are deduced from revealed personal data and/or inferred from behaviour, including contextual information (e.g. time and place). This tier has gained significant importance in step with the growth in data points and capabilities within Artificial Intelligence.

The complexity of using information about the user may range from adapting direct mail to particular segments to the use of black-box algorithms,54 where artificial intelligence is used to determine the most profitable communication strategies. In the latter example, it may only be possible to explain the logic in general terms, and not why certain individuals are exposed to specific information.55

Even though the trader must inform the data subject about the purpose for processing, the data subject may have to infer the consequences himself. Especially when personal data are shared across platforms and among traders, it may be very difficult to comprehend the actual implications. This is especially true if storytelling is used to create the impression that the customisation is primarily done to help the user; e.g., to avoid wasting time on irrelevant marketing.

2.4. Their relative importance

All three tiers of information asymmetry have always played a part in marketing, but with digital technologies, their relative importance has shifted tremendously. In particular, Tiers 2 and 3 have become automated and scalable, and fast enough to adapt in real time. Behavioural tracking capabilities have also added to the sophistication of Tiers 2 and 3.

Personalisation can be used to customise the user experience, marketing, products, prices, contract terms, etc. What is particularly important about Tier 3 is that every user is treated differently, with the consequent risk that they will lose their sense of direction.56 This is similar to the general loss of direction created by the careful design of shopping malls;57 but in data-driven business models, the shopping mall can be designed for each individual. Access to Artificial Intelligence coupled with behavioural sciences and personal data means that the trader is very likely to know far more about how individual consumers are likely to behave and can be persuaded than the consumers know themselves.58 Tier 1, by comparison, has been largely irrelevant for at least half a century59—beyond compliance with mandatory information requirements in consumer law.

| Tiers | The offer | Human behaviour | The user |
| --- | --- | --- | --- |
| Information about | Seller, product and terms | Human decision-making (behavioural sciences, including psychology) | Individual and aggregate attributes and behaviours (personal and non-personal data) |
| Can be used for | Informing the consumer | Influencing preferences and behaviour (marketing, including persuasive design) | Tailoring products and marketing to individuals or segments, including contextual tailoring |
| Obtained from | Producing and/or selling products | Research, literature, conferences, advertising industry etc. | Data analysis, in-house, outsourced or from platforms (using data ‘on behalf of’) |
| How the user learns | Information from the trader, reviews, mainstream media etc. | Experience and possible information from mainstream media | Privacy policies (traders and platforms etc.) and possible information from mainstream media |
| Level of complexity | Low to medium (depending on product and business model) | High (when it comes to understanding its real impact) | From low (e.g. newsletter) to very high (e.g. AI and data aggregated from many sources) |

Figure 9.1. A Taxonomy of information asymmetries divided into 3 tiers.

2.5. Levelling asymmetries

From an economic perspective, disclosure of information is an inexpensive way of levelling information asymmetries, at least for the first tier of information asymmetry. For the second and third tiers of information asymmetry, it may be relatively easy to convey information about what the trader does, but the real implications for the consumer may be much more difficult to tease out.

It may, e.g., be straightforward to explain that personal data are collected—and possibly shared with partners—for marketing purposes and that the user experience is adjusted to ‘personal preferences’, but it may not be clear what information will be filtered out and how the personalisation is likely to affect the individual consumer’s decision-making (‘economic interests’) and privacy.

Disclosure of the strategies and tactics behind behavioural design—including friction, prompts and the decision to include or exclude particular information—may help to level out this asymmetry, especially if it includes information about how human decision-making is (likely to be) affected. This is, however, not commonly done, and to meaningfully explain the use of behavioural insights in persuasive technology and marketing may be difficult if the trader wants to appear trustworthy. Understanding its impact requires significant effort—including self-insight—from the consumer, who may be biased by overconfidence,60 including the illusion of invulnerability to persuasion.61

Applying behavioural insights to choice architecture may be akin to ‘misdirection’ and the ‘illusion of a free choice’ that are central elements in the performing of magic.62 When one enters a magic show, such manipulation is expected, but when a consumer is looking to inform himself prior to taking an economic decision, he does not necessarily expect similar sophistication to be applied. As observed by Douglas Rushkoff,

‘[an] internet run by commercial interests means more than just customized banner ads and spam. It is a world more contained and controllable than a theme park, where the techniques of influence can be embedded in every frame and button.’63

Traders, in particular, have three advantages: (1) they design the experience (persuasion architecture), the contract terms, etc.; (2) due to economies of scale, they can, relative to consumers, better justify spending resources on learning about persuasive design; and (3) consumers, who must read and understand multiple traders’ terms and other information, are on average not likely to (fully) comprehend the underlying purposes, techniques and complexities.

It should be obvious that the information paradigm has its limitations and that there is a need to explore other means for levelling information asymmetries to empower users.64 Real empowerment, which supports the consumer’s right to self-determination, must embrace bounded rationality and take into account the sophistication behind persuasive technology. We must understand the users’ condition as well as the environments in which they act, or as expressed by Richard Craswell:

‘In short, it is hard to justify either moral or economic objections to requiring advertisers to take their customers as they find them.’65

In recital (20a) of the proposal for an ePrivacy Regulation, the ‘end-user’s self-determination’ is explicitly mentioned, and it is noted that ‘[…] end-users may be overloaded with requests to provide consent [which] can lead to a situation where consent request information is no longer read and the protection offered by consent is undermined’. The recital encourages providers of software to include settings in their software which ‘allows end-users, in a user friendly and transparent manner, to manage consent’.

3. Let’s be honest

As mentioned in Chapter 6 (human decision-making), successful traders seek to create partnerships with their consumers,66 and as in real friendships, traders could aspire to be honest in their communication. Empathy, as in understanding and caring for the consumers’ conditions, is important for honesty. Honesty entails an obligation to be transparent and refrain from manipulating, including by exploiting insights into human decision-making and persuasive technology. This means to be respectful of the consumer’s goals, values and preferences, as discussed in the previous chapter in the context of nudges and defaults.

The UCPD’s provision on misleading omissions could be interpreted as an honesty requirement, as it requires that ‘material information’ not be provided in ‘an unclear, unintelligible, ambiguous or untimely manner’. The requirement of ‘professional diligence’ must explicitly be ‘commensurate with honest market practice and/or the general principle of good faith’. Inspiration may also be drawn from the ICC’s Advertising and Marketing Communications Code (2018):

‘Article 1.

All marketing communications should be legal, decent, honest and truthful.

[…]

Article 4.

Marketing communications should be so framed as not to abuse the trust of consumers or exploit their lack of experience or knowledge.

Relevant factors likely to affect consumers’ decisions should be communicated in such a way and at such a time that consumers can take them into account.’

Honesty could, for instance, be an argument against traders using friends’ names and pictures for marketing, as in ‘[your friend] just bought/liked this product/company’.67 Even though the statement is truthful, the practice utilises Cialdini’s liking principle, which also works in persuasive technology.68 The practice may be used both to increase engagement and to serve the interests of third party advertisers, and it may be difficult to create honest transparency with regard to the psychological effect of this commercial practice. This also makes it difficult to obtain (transparent) consent.

From the consumer’s friend’s perspective, such usage of his image for commercial purposes infringes on his privacy, including the protection of personal data, which may be contrary to the legitimacy and proportionality principles of the GDPR, as discussed in Chapter 4 (data protection law). The information is taken from one context and used in a very different (unforeseeable) context.

In addition to cookies, there are tracking techniques that are even more covert. Spy pixels are hyperlinks to remote image files embedded in HTML email messages; when the image is downloaded (often automatically), the trader can see that the recipient has opened the email. Fingerprinters allow for identification across websites based on unique features of the user’s computer that are accessible through the browser, including the operating system and installed fonts.69 The use of these techniques is not foreseeable for the average user, which suggests that consumers should be provided with information about them. Because of their similarities with cookies, consent is most likely needed as well.
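How little is needed for these two techniques can be shown with a minimal sketch. It rests on stated assumptions: the helper names, the feature names and the address `tracker.example` are hypothetical, and real trackers combine many more signals than the two shown here. The first function lists the images that an email client would fetch from a remote server (each fetch reveals that the message was opened); the second reduces a handful of browser-visible features to a single stable identifier.

```python
import hashlib
from html.parser import HTMLParser
from urllib.parse import urlparse


class RemoteImageFinder(HTMLParser):
    """Collect the URLs of images that would be fetched from a remote server."""

    def __init__(self):
        super().__init__()
        self.urls = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        src = dict(attrs).get("src") or ""
        # Only http(s) sources trigger a request to the sender's server;
        # embedded images (e.g. "cid:" references) do not.
        if urlparse(src).scheme in ("http", "https"):
            self.urls.append(src)


def remote_images(html):
    """Return the remote image URLs found in an HTML email body."""
    finder = RemoteImageFinder()
    finder.feed(html)
    return finder.urls


def fingerprint(features):
    """Hash browser-visible features (hypothetical names) into one identifier."""
    canonical = "|".join(f"{key}={features[key]}" for key in sorted(features))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()
```

A message containing `<img src="https://tracker.example/open.gif?id=abc123">` would be reported by `remote_images`, and the query string is what lets the sender tie the download to one recipient. The same set of features always yields the same `fingerprint` value, regardless of the website collecting them, which is why no cookie is needed for recognition.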

3.1. Levels of transparency

Information only creates transparency when it is comprehended by the recipient, and to be practical, we must also assume transparency when the consumer has received honest information in a way that engages or appeals to his capacity for reflection and deliberation. We could call it ‘translucency’, i.e. when the consumer is aware of ignoring information, the meaning of which he can reasonably foresee.70

The care that can be expected from the trader must increase in step with (a) the consequences for the consumer and (b) the complexity involved. Consequences may relate to the impact on both privacy and economic interests. Contextual advertising and chronological newsfeeds are, for instance, less complex and more intuitive than information personalised by algorithms. Levels of transparency could include the consumer’s recognition of:

the existence of behaviour modification,

the commercial intent,

the nature of the commercial practices in play,

how practices are likely to affect the consumer’s behaviour,

what content is personalised,

what information is filtered out,

the consequences, including for privacy and economic interests,

possible risks, such as emotion modification, polarisation and addiction.

Consumers are expected to be aware of the purpose and effects of advertising and sales promotions,71 and to know that they may also be influenced by emotions which play on the feeling of freedom and independence.72 Consumers may be aware of the purpose of commercial practices, which is notably not the same as understanding how those practices influence their behaviour. Professional diligence must be understood also in the light of the legitimate expectations of the average consumer.73

Articles 7(1) and 7(2) UCPD require that the commercial intent must be apparent from the context and that the user must be provided with ‘material information that the average consumer needs, according to the context, to take an informed transactional decision’. This could, in line with the GDPR, include information about what data are being used in the actual context as well as how, when and why these data are being used (possible consequences and logic involved).

For editorial media, there is a requirement of separation between editorial content and commercial communication.74 This principle of separation could be used as an argument—subject to proportionality, considering the potential infringement on privacy and agency—for (a) separating data between the primary and ancillary product and (b) making it distinctively clear when (1) surveillance is taking place and (2) content is personalised, e.g. by optical and/or acoustic and/or spatial means. Also in this context, pervasive guidance on how to withdraw consent must be considered ‘material information’.

3.2. Honest framing

Concepts like privacy and economic interests may be abstract, and thus difficult for consumers to comprehend. Empathic disclosure of information must ensure that the trader considers the consumer’s reasonable expectations, as implied in the definition of the average consumer in the UCPD and explicitly used in the GDPR.

Storytelling is a powerful tool that can be used for both manipulation and transparency, and traders have the means to pre-test information. To be honest, the trader must be careful to use truthful and coherent frames that may be helpful for consumers to take decisions in accordance with their goals, values and preferences. As expressed by Barry Schwartz and Kenneth Sharpe:

‘Thus our task when we make decisions is not to avoid being influenced by frames, but to choose the right frames—frames that help us to evaluate all that is relevant. And judging what is the right frame will depend on the purposes of our evaluation and impending decision.’75

Honest framing must also entail transparency with regard to the trader’s interests, strategies and tactics. For example, it seems as though Facebook is doing us a favour when they state that:

‘We use your personal data, such as information about your activity and interests, to show you ads that are more relevant to you.’76

Of course we, in general, want information to be relevant. But do we want relevant advertising? Unless users visit Facebook with the aim of watching targeted advertising, the cost of using this ‘free’ service is directly proportionate to the relevance of the displayed advertising, assuming that the efficiency of behaviour modification increases with relevance.

It is often claimed by traders that they ‘do not sell your data’, which sounds comforting. Due to the availability heuristic, the consumer may be distracted from considering the fact that data are likely rented out for commercial purposes, and maybe even shared with (not sold to) third parties.

As mentioned in Chapter 6 (human decision-making), there are slight indications of the CJEU moving from the information paradigm towards a communication paradigm in the context of the UCPD.77 However, under the GDPR, we may require something closer to a transparency paradigm, which includes translucency.

3.3. Explainability

As mentioned in Chapter 2 (data-driven business models), a feature of AI is that it can identify correlations beyond what human beings, including the designers of the AI, can understand. AI is also by design unpredictable, yet efficient in achieving the goals it is programmed to pursue. The use of an average user as a benchmark requires that the courts balance the trader’s obligation to provide clear and comprehensible information against the consumer’s obligation to devote attention and cognition to comprehending the information.

As an example of proportionality under the GDPR, transparency in the context of ‘automated decision-making, including profiling’ (Article 22 GDPR), entails providing ‘meaningful information about the logic involved’, as well as information about ‘the significance and the envisaged consequences of such processing for the data subject’.78 This obligation could serve as inspiration for ensuring overall transparency in the context of data-driven business models.

Similarly, in the CJEU’s decision in the Planet49 case, it is mentioned that ‘clear and comprehensive information’ implies that a user is ‘in a position to be able to determine easily the consequences of any consent’ and that the information must be ‘sufficiently detailed so as to enable the user to comprehend the functioning of the cookies employed’.79

As mentioned in Chapter 5 (marketing law), the New Deal for Consumers Directive added transparency requirements in the context of ranking, which entails disclosure of ‘parameters determining the ranking of products’ and ‘the relative importance of those parameters, as opposed to other parameters’. It is recognised that ‘ranking or any prominent placement […] has an important impact on consumers’ (recital 18). Although the scope is limited, inspiration can be drawn for other uses of AI.

The proposed Digital Services Act suggests a requirement to provide ‘meaningful information about the main parameters used to determine the recipient to whom the advertisement is displayed’. For ‘very large online platforms’, it is emphasised that the provider must ensure (a) that the recipients understand how information is prioritised for them and (b) the recipients enjoy alternative options that are not based on profiling.80 Similar transparency requirements are found in the Platform to Business Regulation.81 The obligations pertaining to the main parameters determining the ranking are without prejudice to the Trade Secrets Directive.82

It goes beyond the scope of this book to dive into the details of ‘Explainable AI’. The first point here is to note that there are somewhat elaborate standards for creating transparency in the context of personalisation, which may be used as inspiration in the interpretations of the UCPD and the GDPR. The provisions may serve as inspiration for the explainability of commercial practices beyond the use of AI, recognising that ‘meaningful information’ is a vague term.

A second point is to consider the legitimacy and proportionality of using Artificial Intelligence for behaviour modification in a commercial context. This assessment could include whether the personalisation (1) is difficult or impossible to explain, (2) is more complicated than necessary (complexity by design) and (3) primarily serves the goals of the trader.

A tougher explainability test could be that transparency requires that the (average) user should be able to explain what is going on. Try and ask a friend ‘who pays for Facebook?’ The conversation is likely to go like this:

Friend: ‘we do.’

You: ‘how?’

Friend: ‘with our data, silly!’

You: ‘but Mark Zuckerberg can’t buy bread with data?’

Friend: ‘well, OK, advertisers pay Facebook.’

You: ‘but why would they pay Facebook?’

Friend: ‘because people buy the advertised products, of course.’

You: ‘could those people include you?’

Friend: ‘hmm maybe’ or ‘no, I am not influenced by advertising.’

You: ‘is it fair to say that we pay with real money?’

Friend: ‘well, yes, I guess.’

You: ‘so what is your opinion on personalised advertising?’

Friend: ‘it’s great! I don’t have to waste time on irrelevant advertising.’

You: ‘hmm . . .’

4. Permission: consent or desist

Both the GDPR and the UCPD rely on the consumer as an active participant, including by his reading and understanding information in order to pursue his goals, values and preferences. However, there must be a threshold for what can reasonably be expected of the average user in terms of devoted time and cognition, i.e. situations where transparency is not possible to establish, and where, as a consequence, informed consent cannot be given. It could also be considered that after a certain point, freedom actually decreases with options, which may be an argument for prohibiting certain data-driven marketing practices. As discussed in the previous chapter, a solution is to ensure that defaults are designed to preserve consumers’ goals, values and preferences. As expressed by Helen Nissenbaum:

‘Proposals to improve and fortify notice-and-consent, such as clearer privacy policies and fairer information practices, will not overcome a fundamental flaw in the model, namely, its assumption that individuals can understand all facts relevant to true choice at the moment of pair-wise contracting between individuals and data gatherers.’83

What is permissible data-driven marketing must depend on (a) privacy impact, (b) complexity and (c) the reasonable expectations of the average user weighed against measures to (1) establish transparency, including translucency, (2) ensure that consent unambiguously reflects the consumer’s wishes, including (3) by careful consideration of (i) human decision-making and (ii) persuasive technology. Due to the requirements of legitimacy and proportionality in data protection law, it must also be considered to what extent the processing of personal data is necessary for the purposes of behaviour modification.

Data-driven business models often rely on subscriptions, which entail a time-span (possibly of years) between contracting/consenting and the actual performance of the contract. It can therefore be suggested that the user should occasionally be reminded of his consent to ensure that it reflects the user’s preferences.84

5. ‘Free’ as in . . .

Data-driven business models often rely on offering a product free of charge (‘free as in beer’) and securing funding from other streams of revenue based on attention and/or data (‘value extraction’). So it may be more ‘free as in “free beer” with undeniable expectations or privileges’.

Besides being an attractive price point, ‘free’ is also the mother of all misleading frames in the context of data-driven business models. It relies heavily on Cialdini’s reciprocity and liking principles, and it removes friction. How can a company that offers us free products be bad? As expressed by Tim Wu:

‘Thanks to the attention merchant’s proprietary alchemy, advertising would make Google feel “free” to its users as if it were just doing them a big favor.’85

It may not be as difficult to identify the challenges as it is to accept the somewhat counter-intuitive frame that ‘free’ can be extraordinarily expensive, cf. the dialogue above.86 The use of the term ‘free’ has been characterised as an emotional hot button which makes us forget the downside of, e.g., eating ‘free chocolate’ (non-economic cost).87 Humans are afraid of loss (downgrading), and we tend to overvalue things we have. This observation has been used to explain the effectiveness of ‘free’ trial periods.88

As discussed in Chapter 5 (marketing law), it is prohibited per se to describe a product as ‘“gratis”, “free”, “without charge” or similar if the consumer has to pay anything other than the unavoidable cost of responding to the commercial practice and of collecting or paying for the delivery of the item’.89 It seems reasonable to argue that the provision applies only to payments of monetary value, as that could reflect the intuitive understanding of ‘free’ by the average consumer. However, the CJEU has emphasised in the context of advertorials (Item 11) that such a narrow understanding of payment (from a trader) does ‘not reflect the reality of […] advertising practice and would in large measure deprive that provision of its effectiveness’.90

It may be argued that the prohibition of certain uses of the term ‘free’ could similarly be deprived of its effectiveness by a narrow interpretation of ‘payment’. And even without such broad interpretation, it could be contrary to the general prohibitions against unfair and misleading commercial practices to advertise a service as free, while requiring the processing of personal data that have ‘asset value’ and are not strictly necessary for delivering the service.

The CJEU has emphasised that information about terms for and consequences of concluding a contract is of fundamental importance for a consumer, and that ‘since the price is, in principle, a determining factor in the consumer’s mind when it must make a transactional decision, it must be considered information necessary to enable the consumer to make such a fully informed decision’.91

The privacy implications when signing a contract must at least be in the same order of importance. Thus, there is good reason to argue that it is misleading to describe a product as ‘free’ when the processing of personal data goes beyond what is strictly necessary for delivering the primary product. In the Commission Staff Working Document,92 it is noted that there is an increasing awareness of the ‘de facto’ economic value of personal data being ‘sold to’ (used on behalf of) third parties and that failure to inform consumers that their personal data will be processed for economic purposes could amount to omitting ‘material information’.

Even though the New Deal for Consumers Directive recognises that ‘digital content and digital services are often supplied online under contracts under which the consumer does not pay a price but provides personal data to the trader’,93 the widening of the scope of the Consumer Rights Directive is not to justify such practices, but to ensure that consumers still enjoy the protection offered by that directive. In the UCPD it is stated that price indications may not be misleading.94 In addition, it follows from Article 5(2) of the E-Commerce Directive that price references must be indicated clearly and unambiguously.

5.1. Paying with personal data

The processing of personal data for marketing purposes beyond what is strictly necessary for delivering a primary product has ‘asset value’, and it may thus seem fair to argue that ‘paying with personal data’ is more truthful than saying the service is ‘free’. The framing is compelling and convincing, but for the consumer, the value of personal data is quite abstract, and the same data can be given to different traders without experiencing friction. The consumer may not feel the friction of paying or the cost of being manipulated.

In many cases, we pay with attention and agency, which are both scarcer and more precious than personal data, and (as we discuss in the following chapter) are important in personal, social and societal contexts. In contrast to paying with data, there is an opportunity cost to paying with attention and agency. In terms of both transparency and translucency, it must be ensured that the user understands the technology and logic involved in data-driven marketing, as well as its significance and the envisaged consequences. This must, in particular, include applied means of persuasive technology to increase engagement.

As we discuss in Chapter 10 (human dignity and democracy), it is behaviour modification that the advertisers are paying for. If the techniques used for this purpose, including surveillance and manipulation, go beyond the reasonable expectations of the average user, there are good arguments for saying that ‘paying with personal data’ is misleading, and possibly contrary to the requirement of lawful processing of personal data.

1. Tim Wu, The Attention Merchants (Alfred A. Knopf 2017), p. 77.

2. Frank Luntz, Words That Work (Hyperion 2007). See also George Lakoff, [The All New] Don’t Think of an Elephant (Chelsea Green Publishing 2014, first published 2004).

3. Claude E. Shannon & Warren Weaver, The Mathematical Theory of Communication (University of Illinois Press 1949), in particular Introduction and Figure 1. See also Jimmy Soni & Rob Goodman, A Mind at Play (Simon & Schuster 2017).

4. Jimmy Soni & Rob Goodman, A Mind at Play (Simon & Schuster 2017), p. 139.

5. See also Shmuel Becher & Yuval Feldman, ‘Manipulating, Fast and Slow: The Law of Non-Verbal Market Manipulations’, Cardozo Law Review, 2016, pp. 459–507.

6. Albert Mehrabian & Morton Wiener, ‘Decoding of Inconsistent Communications’, Journal of Personality and Social Psychology, 1967, pp. 109–114; and Albert Mehrabian & Susan R. Ferris, ‘Inference of Attitudes from Nonverbal Communication in Two Channels’, Journal of Consulting Psychology, 1967, pp. 248–252.

7. See, e.g., Case C‑54/17, Wind Tre, ECLI:EU:C:2018:710, paragraph 45: A ‘free choice’ supposes, in particular, that information provided is ‘clear and adequate’.

8. See also Article 29 Working Party ‘Guidelines on transparency under Regulation 2016/679’, WP260 rev.01, 11 April 2018, and <https://www.datatilsynet.no/en/news/2021/intent-to-issue--25-million-fine-to-disqus-inc/>.

9. See e.g. Jacob Jacoby, ‘Perspectives on Information Overload’, 10 Journal of Consumer Research, 1984, pp. 432–435.

10. Developed in the context of voter ignorance; see Anthony Downs, An Economic Theory of Democracy (Harper and Row 1957).

11. Herbert A. Simon, ‘Rational Choice and the Structure of the Environment’, Psychological Review, 1956.63, pp. 129–138. See also Jon Elster, Sour Grapes (Cambridge University Press 2016, first published 1983), pp. 17–18 and 93.

12. Barry Schwartz, The Paradox of Choice (Ecco 2016, first published 2004), p. 81.

13. Geoffrey A. Moore, Crossing the chasm (3rd edition, Harper Business 2014), p. 108.

14. Directive 2011/83/EU of 25 October 2011 on consumer rights.

15. Article 7(2) GDPR.

16. Article 29 Working Party, ‘Opinion 03/2013 on purpose limitation (WP203)’, pp. 15–16.

17. EDPB, ‘Guidelines 05/2020 on consent under Regulation 2016/679 (version 1.1)’, paragraphs 55 et seq.

18. Advocate General Trstenjak’s opinion in Case C‑540/08, Mediaprint Zeitungs- und Zeitschriftenverlag, ECLI:EU:C:2010:161, paragraph 104.

19. See, e.g., Norbert Reich, Hans-W. Micklitz, Peter Rott & Klaus Tonner, European Consumer Law (2nd edition, Intersentia 2014), pp. 21–22.

20. Advocate General Fennelly’s opinion in Case C‑220/98, Estée Lauder, ECLI:EU:C:1999:425, paragraph 25.

21. Case C‑371/20, Peek & Cloppenburg, ECLI:EU:C:2021:674, paragraph 41. Emphasis added.

22. Case C‑195/14, Teekanne, ECLI:EU:C:2015:361, paragraph 37 with references.

23. Case C‑195/14, Teekanne, ECLI:EU:C:2015:361, paragraph 38.

24. See Directive 2011/83/EU of 25 October 2011 on consumer rights.

25. See Directive 1999/44/EC of 25 May 1999 on certain aspects of the sale of consumer goods and associated guarantees.

26. Directive 93/13/EEC of 5 April 1993 on unfair terms in consumer contracts.

27. Paragraph 55 (emphasis added).

28. See e.g. Case C‑628/17, Orange Polska, ECLI:EU:C:2019:480.

29. See e.g. Case C‑673/17, Planet49, ECLI:EU:C:2019:801.

30. Case C‑195/14, Teekanne, ECLI:EU:C:2015:361, paragraph 43. See also Shmuel Becher & Yuval Feldman, ‘Manipulating, Fast and Slow: The Law of Non-Verbal Market Manipulations’, Cardozo Law Review, 2016, pp. 459–507.

31. See also George Lakoff, [The All New] Don’t Think of an Elephant (Chelsea Green Publishing 2014, first published 2004).

32. For decision fatigue see Barry Schwartz, The Paradox of Choice (Ecco 2016, first published 2004).

33. Uri Benoliel & Shmuel Becher, ‘The Duty to Read the Unreadable’, Boston College Law Review, 2019, pp. 2255–2296. Applying linguistic readability tests to sign-in wrap agreements for five hundred websites indicated that ‘the average readability level of these agreements is comparable to the usual score of articles in academic journals’.

34. Article 5(3) of Directive 2002/58 as amended by Directive 2009/136/EC.

35. Daniel Kahneman, ‘Maps of Bounded Rationality: Psychology for Behavioural Economics’, 93 The American Economic Review, 2003, pp. 1449–1475, pp. 1469 and 1459. See also Richard A. Posner, ‘Rational Choice, Behavioral Economics, and the Law’, 50 Stanford Law Review, 1997, pp. 1551–1575, p. 1559; and Daniel Kahneman, Thinking, Fast and Slow (Farrar, Straus and Giroux 2011), chapter 35.

36. See e.g. Proposal for a council directive on unfair terms in consumer contracts, 28 September 1990, COM 90(322) final, Official Journal, C 243, pp. 2–5, recital 8: ‘Purchasers of goods and services should be protected against the abuse of power by the seller, in particular against one-sided standard contracts and the unfair exclusion of essential rights in contracts.’

37. See also Joseph Turow, The Daily You—How the New Advertising Industry Is Defining Your Identity and Your Worth (Yale University Press 2011).

38. Council Resolution of 14 April 1975 on a preliminary programme of the European Economic Community for a consumer protection and information policy and Preliminary programme of the European Economic Community for a consumer protection and information policy, 25 April 1975, Official Journal, C 92, Annex, paragraph 6.

39. See, e.g., George A. Akerlof, ‘The Market for “Lemons”: Quality Uncertainty and the Market Mechanism’. The Quarterly Journal of Economics, Vol. 84, No. 3, 1970, pp. 488–500. See also Directive (EU) 2019/770 of 20 May 2019 on certain aspects concerning contracts for the supply of digital content and digital services, recital 59.

40. Articles 6–7 UCPD.

41. See e.g. Directive 2011/83/EU of 25 October 2011 on consumer rights, Articles 5–6.

42. Articles 12–14 GDPR.

43. Directive 1999/44/EC of 25 May 1999 on certain aspects of the sale of consumer goods and associated guarantees, Article 2.

44. See e.g. Article 5 of Directive 93/13/EEC of 5 April 1993 on unfair terms in consumer contracts.

45. See Article 7 UCPD (misleading omissions).

46. See also Xavier Gabaix & David Laibson, ‘Shrouded Attributes, Consumer Myopia, and Information Suppression in Competitive Markets’, Quarterly Journal of Economics, 2006, pp. 505–540.

47. See e.g. Claude C. Hopkins, Scientific Advertising (1923); Edward Bernays, Propaganda (Horace Liveright 1955, first published 1928); Donald A. Laird, What makes People Buy (McGraw-Hill 1935); and Vance Packard, The Hidden Persuaders (Ig Publishing 2007, first published 1957).

48. See e.g. Daniel Kahneman, Thinking, Fast and Slow (Farrar, Straus and Giroux 2011).

49. Herbert A. Simon, ‘Rational Choice and the Structure of the Environment’, Psychological Review, 1956.63, pp. 129–138.

50. Roy F. Baumeister & John Tierney, Willpower (Penguin 2011) and Walter Mischel, The Marshmallow Test (Little, Brown 2014).

51. See also George A. Akerlof & Robert J. Shiller, Phishing for Phools (Princeton University Press 2015).

52. Case C‑371/20, Peek & Cloppenburg, ECLI:EU:C:2021:674, paragraph 39. Emphasis added.

53. Eli Pariser, The Filter Bubble (Penguin 2011), p. 121. See also BJ Fogg, Persuasive Technology (Morgan Kaufmann 2003), p. 41.

54. See Frank Pasquale, The Black Box Society (Harvard University Press 2015).

55. See also about the explanation of the logic behind automated decision-making in Section 2 and Article 22 GDPR.

56. Douglas Rushkoff, Coercion (Riverhead 1999), p. 66: The first step in the creation of coercive environments is to excite and disorient.

57. Paco Underhill, Call of the Mall (Simon & Schuster 2004).

58. See also Youyou Wu, Michal Kosinski & David Stillwell, ‘Computer-based personality judgments are more accurate than those made by humans’, PNAS, 2015, pp. 1036–1040.

59. Douglas Rushkoff, Coercion (Riverhead 1999), p. 161; and Marty Neumeier, The Brand Gap (AIGA New Riders 2006), pp. 38–39.

60. See, for instance, Richard H. Thaler & Cass R. Sunstein, Nudge—The Final Edition (Yale University Press 2021, first published 2008), pp. 32–34.

61. Brad J. Sagarin, Robert B. Cialdini, William E. Rice & Sherman B. Serna, ‘Dispelling the illusion of invulnerability: the motivations and mechanisms of resistance to persuasion’, Journal of Personality and Social Psychology, 2002, pp. 526–541.

62. See, e.g., Mark Wilson, Mark Wilson’s Complete Course in Magic (Ottenheimer 1988, first published 1975), pp. 15 and 85. Mark Wilson (born 1929) majored in advertising and combined his knowledge of advertising and magic with the newest form of communication (television).

63. Douglas Rushkoff, Coercion (Riverhead 1999), p. 257.

64. See also Stephen Weatherill, ‘Empowerment is not the only Fruit’, in Dorota Leczykiewicz & Stephen Weatherill (eds), The Images of the Consumer in EU Law (Hart 2016), pp. 203–221.

65. Richard Craswell, ‘Interpreting Deceptive Advertising’, 65 Boston University Law Review, 1985, pp. 657–732, p. 704.

66. Seth Godin, Tribes (Portfolio 2008) and Joseph Turow, The Aisles Have Eyes (Yale University Press 2017).

67. See also Seth Godin, All Marketers are Liars (Portfolio 2009, first published 2005), p. 138; and Shmuel I. Becher & Sarah Dadush, ‘Relationship as Product: Transacting in the Age of Loneliness’, University of Illinois Law Review, 2021, pp. 1547–1604.

68. BJ Fogg, Persuasive Technology (Morgan Kaufmann 2003), pp. 95–99.

69. See also Chris Jay Hoofnagle, Ashkan Soltani, Nathaniel Good, Dietrich J. Wambach & Mika D. Ayenson, ‘Behavioral Advertising: The Offer You Cannot Refuse’, Harvard Law & Policy Review, 2012, pp. 273–296. For more information about trackers and how well your browser is protected, visit <https://coveryourtracks.eff.org/> at the Electronic Frontier Foundation.

70. For illustration, the April Fools’ Day (2010) insertion of an ‘immortal soul clause’ to GameStation’s terms (with the possibility to opt-out) was not foreseeable.

71. Case C‑540/08, Mediaprint Zeitungs- und Zeitschriftenverlag, ECLI:EU:C:2010:161, opinion of Advocate General Trstenjak, paragraph 104.

72. Case C‑540/08, Mediaprint Zeitungs- und Zeitschriftenverlag, ECLI:EU:C:2010:660.

73. Case C‑310/15, Deroo-Blanquart, ECLI:EU:C:2016:633, paragraph 34.

74. See e.g. Jan Trzaskowski, ‘Identifying the Commercial Nature of “Influencer Marketing” on the Internet’, Scandinavian Studies in Law, 2018, pp. 81–100. See also the Audiovisual Media Services Directive, Article 19 and recital 81.

75. Barry Schwartz & Kenneth Sharpe, Practical Wisdom (Riverhead 2010), p. 64.

76. Facebook’s ‘Terms of Service’, <https://www.facebook.com/legal/terms/> (visited [from Europe] October 2021).

77. See also O. Seizov, A. J. Wulf & J. Luzak, ‘The Transparent Trap: A Multidisciplinary Perspective on the Design of Transparent Online Disclosures in the EU’, Journal of Consumer Policy, vol. 42(1), 2019, pp. 149–173.

78. See Articles 13(2)(b) and 22. Emphasis added.

79. Case C‑673/17, Planet49, ECLI:EU:C:2019:801, paragraph 74 with explicit reference to point 115 of Advocate General Szpunar’s opinion. Emphasis added.

80. Proposal for a Digital Services Act, 15 December 2020, COM(2020) 825 final, 2020/0361 (COD), Articles 24(1)(c) and 30, and recital 62.

81. Regulation (EU) 2019/1150 on promoting fairness and transparency for business users of online intermediation services, Article 5. See also Commission Notice, Guidelines on ranking transparency pursuant to Regulation (EU) 2019/1150 of the European Parliament and of the Council, 8 December 2020, Official Journal, C 424/1.

82. Directive (EU) 2016/943 on the protection of undisclosed know-how and business information (trade secrets) against their unlawful acquisition, use and disclosure.

83. Helen Nissenbaum, ‘A Contextual Approach to Privacy Online’, Daedalus, 2011, pp. 32–48.

84. See Bart Custers, ‘Click here to consent forever: Expiry dates for informed consent’, Big Data & Society 3(1), 2016. See also Article 9(3) of the proposal for an ePrivacy Regulation, suggesting reminders every 6 months (as long as the processing continues).

85. Tim Wu, The Attention Merchants (Alfred A. Knopf 2017), p. 259.

86. See also Erik K. Clemons, New Patterns of Power and Profit (Springer 2019), p. 235: ‘For some services, like free search, “free” may actually be the most expensive way to provide the service.’

87. Dan Ariely, Predictably Irrational (Harper Perennial 2008), pp. 49 and 54.

88. ‘We fall in love with what we have’, Dan Ariely, Predictably Irrational (Harper Perennial 2008), pp. 133 and 237–238.

89. Point 20 of Annex I (emphasis added). See also Article 5(5).

90. Case C‑371/20, Peek & Cloppenburg, ECLI:EU:C:2021:674, paragraph 42.

91. Case C‑54/17, Wind Tre, ECLI:EU:C:2018:710, paragraphs 46–47 with reference to Case C‑310/15, Deroo-Blanquart, ECLI:EU:C:2016:633, paragraph 40, and Case C‑611/14, Canal Digital Danmark, ECLI:EU:C:2016:800, paragraph 55.

92. See Commission Staff Working Document, pp. 25, 63, 88–89 and 130–131.

93. Recital 31. According to recital 35, the scope does not extend to ‘situations where the consumer, without having concluded a contract with the trader, is exposed to advertisements exclusively in order to gain access to digital content or a digital service.’

94. See also Case C‑487/12, Vueling Airlines, ECLI:EU:C:2014:2232, concerning the relationship between the general rules laid down in the UCPD and Directive 2011/83/EU which is lex specialis concerning transparency in air carriers’ pricing practices.