CHAPTER EIGHT

Manipulation is closely related to agency and the right to self-determination. As mentioned in Chapter 2 (data-driven business models), we use the term ‘manipulation’ for the unlawful influence (‘behaviour modification’) that includes both deception and coercion. Manipulation can be said to steer the choices of others by morally problematic means, including ‘employing emotional vulnerability or character defects’,1 ‘in order to make us act against—or, at the very least, without—our better judgment’.2

In his analysis of manipulation, Cass R. Sunstein similarly finds that ‘an action does not count as manipulative merely because it is an effort to alter people’s behavior’ and that there is ‘a large difference between persuading people and manipulating them’.3 In defining manipulation, Sunstein suggests focusing on whether the effort in question ‘does […] sufficiently engage or appeal to [… the consumer’s] capacity for reflection and deliberation’ (emphasis added), and where ‘the word “sufficiently” leaves a degree of ambiguity and openness, and properly so’.4

This is similar to the fine line that must be drawn between ‘legitimate influence’ and ‘illegal distortion’ of the average consumer’s behaviour under the UCPD. It could also be argued that to be able to objectively ascertain whether consent is given, there must be a sufficient engagement of or appeal to the data subject’s capacity for reflection and deliberation, as discussed above in Chapter 4 (data protection law) in the context of ‘genuine choice’ and the interpretation of the Planet49 case.

Sunstein states that ‘no legal system has a general tort called “exploitation of cognitive biases”’,5 but both the UCPD and the GDPR may be interpreted as including a prohibition of manipulation within the above-mentioned definition, i.e. focusing on the lack of engaging or appealing to the consumer’s capacity for reflection. The UCPD explicitly mentions coercion, and the prohibition of misleading commercial practices could be interpreted to mean the absence of deception.

1. Manipulative commercial practices

Under the UCPD, a commercial practice is aggressive if—in its factual context, taking account of all its features and circumstances—by harassment, coercion, including the use of physical force, or undue influence it is likely to (a) significantly impair the average consumer’s freedom of choice or conduct and (b) cause him to take a transactional decision that he would not have taken otherwise.6

Undue influence exists when a trader exploits ‘a position of power in relation to the consumer’ so as to apply pressure—even without physical force—if this ‘is likely to significantly impair the average consumer’s freedom of choice or conduct’.7 Account must be taken of inter alia (a) ‘its timing, location, nature or persistence’ as well as (b) ‘the use of threatening or abusive language or behaviour’ (emphasis added).8 From its wording, this list is not exhaustive. It follows from recital 16 UCPD that ‘the provisions on aggressive commercial practices should cover those practices which significantly impair the consumer’s freedom of choice’.9

Undue influence is not necessarily ‘impermissible influence’, but it becomes impermissible when the conduct applies ‘a certain degree of pressure’ and, in the factual context, actively entails ‘the forced conditioning of the consumer’s will’ in a way that is likely to significantly impair the average consumer’s freedom of choice or conduct.10 The CJEU has established that it does not constitute an aggressive commercial practice to ask the consumer to take his final transactional decision without having time to study, at his convenience, the documents delivered to him by a courier if the consumer has been in a position to take cognisance of the standard-form contracts before.11

However, the CJEU found that certain additional practices with the aim of limiting the consumer’s freedom of choice may lead to the commercial practice being regarded as aggressive. This includes conducts that ‘put pressure on the consumer such that his freedom of choice is significantly impaired’ or establish an attitude that is ‘liable to make that consumer feel uncomfortable’ such as to ‘confuse his thinking in relation to the transactional decision to be taken’.12

Such pressure may, for instance, be induced by announcing that less favourable conditions are a consequence of delayed action on the part of the consumer,13 a means of utilising Cialdini’s scarcity lever of influence, as discussed in Chapter 6 (human decision-making). Information may also constitute an important part of manipulative commercial practices, and such practices may thus fall under both aggressive and misleading commercial practices under the UCPD.

As discussed in Chapter 7 (persuasive technology), the design of human–computer interaction plays a significant role in how consumers are influenced in the context of data-driven business models.14 Technology can be automated to both observe and shape our behaviour at scale.15 Some people prefer to use the term ‘dark patterns’ for unfair practices used in computer design to trick people into certain behaviours.16 Here, we use ‘choice architecture’ as a neutral term, recognising that the choice architecture may be designed to manipulate users.

The CJEU has assumed that the average consumer is generally aware of how advertising and sales promotions work in a free market economy, and that today’s consumers are ‘much more circumspect and informed’ as a result of their experiences with marketing; and also that the protection of consumers is a ‘patronising argument’, which is ‘no longer convincing’.17 However, it is not impossible that pervasive exposure to personalised marketing, including the use of prompts and the manipulation of emotions, based on online tracking across platforms coupled with (annoying) cookie-consent boxes, has resulted in additional confusion, cognitive overload and apathy rather than real education.18 As expressed by James Williams:

‘So the main risk information abundance poses is not that one’s attention will be occupied or used up by information, as though it were some finite, quantifiable resource, but rather that one will lose control over one’s attentional processes.’19

Similarly, Tim Wu:

‘We are at risk, without quite fully realizing it, of living lives that are less our own than we imagine.’20

1.1. The average consumer online

As mentioned in Chapter 5 (marketing law), an average consumer must be constructed from the audience to which the marketing is exposed or directed. When personalisation (individuals) and segmentation (groups) are in use, it could be argued that the group of people from which the average is constructed should be narrowed accordingly. As mentioned, the UCPD also applies in cases where the commercial practice only applies to one person.21

The low cost of communication, including by establishing a website or social media presence, sending an e-mail, or creating an app, makes it economically viable to target large audiences in order to profit from only a small group of consumers in the long tail. In that vein it would make sense to consider whether a particular business model is designed to take undue advantage of certain (vulnerable) consumers.22 This will have an impact on how the group of consumers from which the average consumer must be constructed is to be defined.

The situation can be illustrated by so-called ‘subscription traps’, where consumers are given an extraordinarily good offer, e.g. a free smartphone. The consumer must provide payment details to pay for shipping, but the fine print reveals a subscription to a (worthless) service. The trader has been careful in his design to ensure that consumers do not read the information about the subscription.

Say that 1,000,000 consumers are exposed to the offer, 5,000 react and 500 end up accepting the deal. Let us assume that all 500 of these consumers are surprised by the subscription, that 450 of them decide to return the phone and settle with the trader by paying €100 for fear of legal consequences, and that the remaining 50 successfully challenge the validity of the contract. It should be obvious that it matters a great deal which group the average consumer is constructed from.
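The arithmetic behind this example can be made explicit with a minimal sketch. The figures are those given above; the variable names and the choice of three candidate reference groups (everyone exposed, those who reacted, those who accepted) are illustrative assumptions, not a legal test.

```python
# Figures from the subscription-trap example in the text.
# All names are hypothetical and chosen for illustration only.
exposed = 1_000_000      # consumers who saw the offer
reacted = 5_000          # consumers who reacted to it
accepted = 500           # consumers who accepted the deal
settled = 450            # of those, paid to settle
challenged = 50          # of those, successfully challenged the contract
settlement_eur = 100     # amount paid per settling consumer


def share(part, whole):
    """Return part/whole expressed as a percentage."""
    return 100 * part / whole


# Candidate groups from which an 'average consumer' could be constructed.
candidate_groups = {"exposed": exposed, "reacted": reacted, "accepted": accepted}

# Share of each candidate group whose transactional decision was affected.
affected_share = {
    name: share(accepted, size) for name, size in candidate_groups.items()
}

# What the trader collects from the settling consumers alone.
trader_revenue_eur = settled * settlement_eur

for name, pct in affected_share.items():
    print(f"{name}: {pct:.2f}% accepted the deal")
print(f"settlement revenue: EUR {trader_revenue_eur}")
```

On these numbers, the affected consumers make up 0.05% of those exposed, 10% of those who reacted and 100% of those who accepted, while the trader collects €45,000 in settlements. The narrower the reference group, the harder it is to dismiss the affected consumers as atypical.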

The issue seems to be more a matter of professional diligence (aggressive practice), but as the requirements are cumulative, the average consumer cannot be ignored.

The question of how to construct the average consumer in the context of personalisation and segmentation is not settled in law. The same is the case for understanding consent and information under the GDPR in light of an average user. For the GDPR, it would be a practical solution to apply some sort of average user, but—at least in principle—the envisaged rights and protections appear more personal under the GDPR than the UCPD.

1.2. We are all vulnerable

Data-driven marketing may be delivered with surgical precision at an industrial scale, which allows for identifying (a) vulnerable individuals/groups and/or (b) particular vulnerable aspects in all of us, individually.23 It is not difficult to, for instance, market payday loans to people who are (likely to be) interested in gambling. Targeted advertising is profitable24 and exploitable by means of automation in a free-market system.25 If the group from which the average user is constructed is too broad, collateral damage is likely to be high.

A level of collateral damage must be accepted in marketing law, i.e. the distortion of some consumers’ economic behaviour is foreseen, because consumers come in many flavours.26 When marketing is personalised, the argument for collateral damage weakens, however, as the need for an abstraction disappears with concrete identification.

2. Lawful processing of personal data

Both marketing and personalisation of products are legitimate purposes for the processing of personal data. However, what is not settled is the extent to which proportionality allows for personalisation and segmentation, including, in particular, when it is used for manipulation.27 It follows from Article 5(1)(a) GDPR that processing must be both lawful and fair. With regard to the legitimate basis for processing, consent is required for personalisation that ‘is not objectively necessary for the purpose of the underlying contract, for example where personalised content delivery is intended to increase user engagement with a service’.28

2.1. Data minimisation and storage limitation

Personal data must be limited to what is necessary in relation to the purposes for which they are processed (‘data minimisation’) and not kept longer than is necessary for the purposes for which the personal data are processed (Article 5(1)(c) and (e), respectively). It is not settled in case law from the CJEU how much information—for the purposes of marketing—is necessary (data minimisation) and for how long it is necessary to store such data (storage limitation).

These principles are particularly relevant in the context of data-driven marketing that identifies correlations beyond the capacity of human imagination, including by analysing data over time. In that vein, it could be argued that any data—in principle—could be relevant for marketing purposes. Any behaviour, including e.g. typing speed, mouse hovering, phrases used in personal e-mails etc., could provide insights that could eventually prove to be important for identifying traits that are relevant for exposure to marketing.

These principles (as well as other principles) should also be understood in the context of ‘data protection by design and by default’ (Article 25). According to this provision, the trader must—with a view to compliance with the GDPR—integrate necessary safeguards into the processing and implement appropriate technical and organisational measures, including those that will ensure that, ‘by default, only personal data which are necessary for each specific purpose of the processing are processed’ (emphasis added).

Without drawing a particular line, it is safe to assume that these principles set a threshold for what data the trader can use for marketing purposes, regardless of the legitimate basis, including consent, on which the trader relies. The EDPB and the EDPS consider that the use of AI to infer emotions of a natural person is highly undesirable and should be prohibited.29

2.2. Automated individual decision-making, including profiling

As discussed in Chapter 4 (data protection law), the right ‘not to be subject to a decision based solely on automated processing, including profiling’, may limit the use of personal data for marketing purposes, provided the processing produces legal effects or similarly significant effects on the data subject. This issue is not settled in case law, but we find it reasonable to assume that personalised marketing, at least to some extent, amounts to automated individual decision-making, including profiling, that has legal or similarly significant effects.

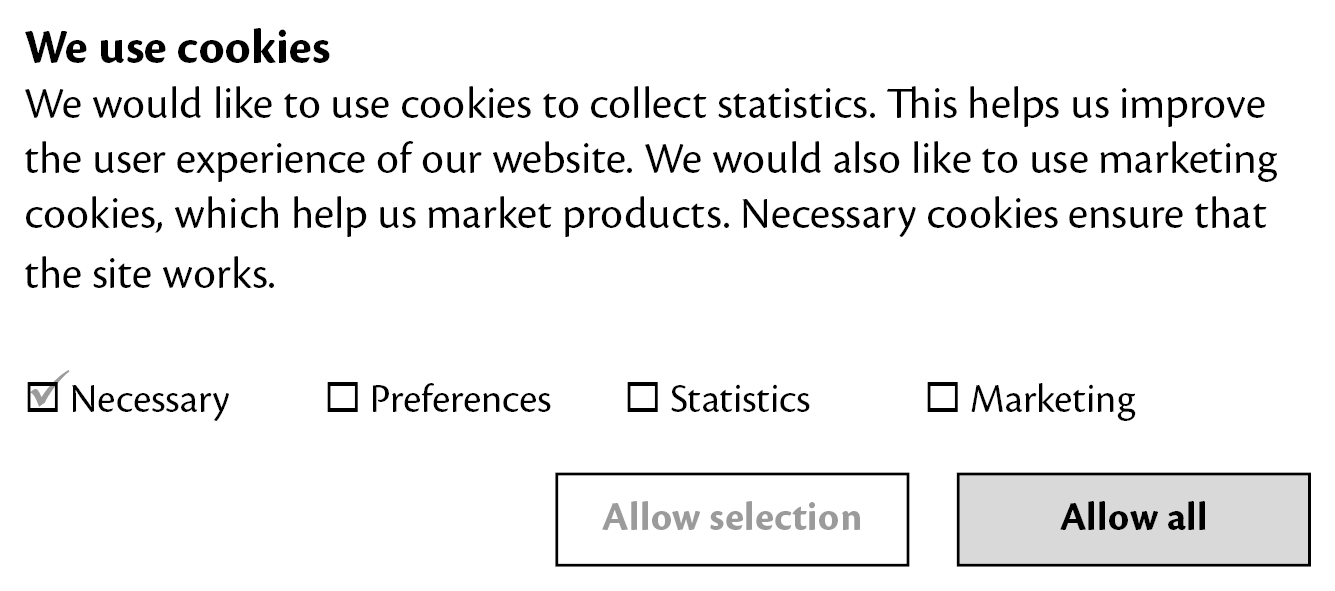

2.3. Pop-ups

One widespread and illustrative use-case of persuasive technology in data-driven business models is found in the context of cookie consent pop-ups. Article 5(3) of the ePrivacy Directive requires neither consent nor a ‘pop-up’ to inform the user about cookies that are ‘strictly necessary’ for carrying out the transmission. In most—if not all—cases, the cookie consent pop-up is a prompt designed to obtain consent for cookies that are not necessary for the transmission. Thus, it could be argued that this prompt in itself could be ‘unnecessarily disruptive to the use of the service’, as mentioned in recital 32 GDPR, and may constitute an aggressive commercial practice under the UCPD.

Pop-up dialogue boxes are widely used as prompts in human–computer interaction as a warning that requires the user to confirm an action (‘delete file?’),30 so even though these prompts are intended as warnings (concerning privacy), we may be conditioned to perceive them as requests for confirmation.31 This is further corroborated by the widespread use of cookie consent pop-ups that may instil a ‘click-fatigue’; in particular when their design varies from one website to another, and the prompt is only persistent until the user has performed the task desired by the trader. It follows from GDPR Article 7(3) that ‘it shall be as easy to withdraw as to give consent’. From a ‘friction’-perspective, this could mean that the user should be similarly prompted at every visit after consent is given, and that the dialogue box should make it equally easy to reject cookies.

The consent pop-up may be carefully designed to animate a particular behaviour by increasing motivation and/or ability (friction). Motivation can be increased by the wording/‘framing’ coupled with relevant storytelling, as discussed in Chapter 9 (transparency).

Friction can be dispensed by the availability of options/buttons as well as by such elements as their (relative) placement, sizing and colouring. ‘Consent’ is normally given in a fraction of a moment by a user who is eager to access the content to which the pop-up is a barrier. A choice between ‘OK’ and ‘later’/‘manage preferences’ makes it more likely that the average user will ‘consent’, since it is the most frictionless option. In contrast to computer prompts, the dialogue box can seldom be closed by hitting the ‘escape key’. Occasionally, no alternative to ‘OK’ is offered.

Figure 8.1. Is it ‘possible in practice to ascertain objectively’ whether consent is given? (cf. the Planet49 case). The issue here is not whether boxes are pre-checked, but the placement and design of the ‘confirmation’-button.

The requirements of data protection by design and by default must at least entail equal friction to consent and not consent; and possibly more friction to consent, to avoid it being ‘consent by default’.32 Given the intrusive consequences of tracking-cookies and the fundamental rights being protected, it seems insufficient that the user is merely left with a guide on how to remove cookies in the browser settings.

Eventually, the courts must—considering the requirements of professional diligence and data protection by design and by default—determine whether the choice-architecture is designed to sufficiently engage—and appeal to—the user’s capacity for reflection and deliberation, as discussed above, as well as whether it is possible, in practice, to ascertain objectively whether it is a clear affirmative action that signifies agreement to the processing.

One could add that to the extent tracking is assumed to be in accordance with the users’ goals, values and preferences, the trader should have no problem with adding a bit of friction by, for instance, replacing the cookie consent pop-up with an unobtrusive ‘please track me!’-button, knowing that people hate waiting but don’t mind clicking.33

3. Subliminal marketing

Subliminal marketing covers techniques that are designed to engage people subconsciously. ‘Subliminal’ is derived from sub (‘below’) and limen (‘threshold’).34 Subliminal messages are visual or auditory stimuli that are not perceived by the conscious mind.35 Article 9(1)(b) of the Audiovisual Media Services Directive36 explicitly prohibits, per se, the use of subliminal techniques in audiovisual commercial communications, including television.

The concept of subliminal techniques has resurfaced in Article 5 of the proposal for an Artificial Intelligence Act. The proposed regulation aims to prohibit a number of particularly harmful AI practices, including:

‘(a) the placing on the market, putting into service or use of an AI system that deploys subliminal techniques beyond a person’s consciousness in order to materially distort a person’s behaviour in a manner that causes or is likely to cause that person or another person physical or psychological harm.’

The focus on ‘subliminal techniques’ is interesting as (1) many aspects of data-driven business models remain beyond the user’s consciousness and (2) these techniques are generally recognised as unlawful per se, and thus are likely to constitute an aggressive practice under the UCPD. According to the explanatory memorandum to the proposed regulation, the focus should be on practices that have a ‘significant potential to manipulate persons through subliminal techniques beyond their consciousness’.37

It is relevant to note that the proposed Artificial Intelligence Act focuses on physical and psychological harm but not economic harm as regulated in the UCPD. Also for subliminal marketing, it remains an open question whether Member States can regulate it—as a matter of taste and decency—to prevent, for instance, psychological harm as we discuss in Chapter 10 (human dignity and democracy).

4. Attention, engagement and addiction

Companies that rely on re-selling attention may improve their revenue by (1) encouraging engagement to increase the amount of attention and/or (2) using personal data (or other information) to increase the value of attention. Neither the re-selling of attention nor the encouragement of engagement is in itself an aggressive commercial practice.

As attention is the real value, traders may be incentivised to become increasingly aggressive in their efforts to attract and maintain the attention of users, especially when their tactics are opaque to the user. With inspiration from behavioural sciences, traders have tools, including ‘gamification’ techniques such as offering the chance to collect points/benefits, that can create addictions38 and dependencies which may not only challenge the user’s autonomy but also have real societal implications, as we discuss in Chapter 10 (human dignity and democracy).

For Facebook, ‘likes’ and the ‘newsfeed’ condition addictive behaviour in ways which are comparable to slot machines, drugs and other addictive technologies that are heavily regulated. If you are not convinced, consider how often, and, in particular, when and why, your friends pay attention to their smartphones.

Auto-Play is another pervasive feature that can remove friction by not engaging or appealing to the user’s capacity for reflection and deliberation. Video and/or audio elements automatically start playing after some triggering event such as page load, approaching the end of a series or when navigating to a particular region of the webpage.39 It may seem harmless (even desirable) that, for instance, the subsequent episode of a television series starts automatically. However, considering the efficiency of increasing (profitable) attention, it could be argued that it is an aggressive commercial practice to not prompt the user to reflect.40 The same arguments can be made against subscriptions where automatic (prompt-less) renewal is the default.

Traders’ access to data on behaviour allows them to both gauge and manufacture engagement. Attention may be increased and conditioned by creating conflict, outrage and social exclusion in social media services. Societal impact may not be the intention, but rather a consequence of optimising algorithms for attention and engagement.

4.1. Exploitation

Allen W. Wood mentions exploitation, which overlaps with coercion and manipulation, as objections that can be raised in the context of advertising, and he observes that exploitation includes ‘making use of the vulnerabilities of others for your ends’.41 Article 5 of the above-mentioned proposal for an Artificial Intelligence Act also aims to prohibit certain exploitations of vulnerabilities, i.e.:

‘(b) the placing on the market, putting into service or use of an AI system that exploits any of the vulnerabilities of a specific group of persons due to their age, physical or mental disability, in order to materially distort the behaviour of a person pertaining to that group in a manner that causes or is likely to cause that person or another person physical or psychological harm.’

Addictive and persuasive design/technology may be more a matter of human well-being and product safety42 than marketing law and data protection law. However, the awareness of its existence and the implications thereof may be important in holistic assessments of the legality of data-driven business models, including the extent to which the use of personal data is appropriate, considering the requirements of legitimacy and accountability. The same goes for commercial practices, where ‘full account should be taken of the context of the individual case’ (recital 7 UCPD).

Even though the proposed Artificial Intelligence Act aims to protect against physical or psychological harm, exploitation of vulnerabilities, like subliminal marketing, has been identified as a particularly harmful AI practice, which could have a spill-over effect on the interpretation of aggressive commercial practices in the UCPD.

5. Just add friction

The use of manipulation may render a commercial practice unfair and mean that consent is not obtained, which may lead to unlawful processing of personal data. However, friction, including prompts, may also be used to engage or appeal to the consumer’s capacity for reflection and deliberation. This engages mental effort involved in System 2-thinking, which activates ‘more vigorous and more analytical brain machinery’.43

By adding friction, users’ revealed preferences may become more aligned with their real preferences. However, friction may also add to ego depletion and decision fatigue, as discussed in Chapter 6 (human decision-making). Thus, a balance must be found, as ‘nobody has the time or cognitive resources to be completely thorough and accurate with every decision’.44 This leads us to revisit freedom, as in agency and self-determination, as introduced in Chapter 6 (human decision-making), and interferences with the consumer’s ‘free will’, including paternalism and nudging.

5.1. Paternalism and the right to self-determination

The principle of free choice (agency) is important in both data protection law and marketing law, as well as being a fundamental principle that underpins the economic rationale behind markets. From a philosophical perspective, paternalism can be defined as ‘the interference of a state or an individual with another person, against their will’.45 Given the powers of surveillance and behaviour modification at traders’ disposal, it may also make sense to emphasise that traders may interfere with citizens’ right to self-determination. It has been argued that ‘treating the design of digital technologies [is] the ground of first struggle for our freedom and self-determination’.46

The idea of personal autonomy is that individuals are ‘self-governing agents’ with the capacity to ‘decide for oneself and pursue a course of action in one’s life, often regardless of any particular moral content’.47 However, autonomy and free choice are not the important part of this equation, given our prior assumption that people have agency. It is more important to discuss what interferences should be accepted, especially given the bounded nature of human rationality that we have also assumed.

In economics, paternalism and its effect on the consumer’s right to self-determination are often used in a narrow sense, including only state interference. This perspective on paternalism is often linked to an ideology in which it is unnecessary and even immoral to protect people against their choices.48 The presumption that individual choices should be respected is usually based on the claim that people do an excellent job of making choices.49 Similarly, Nobel laureates George A. Akerlof & Robert J. Shiller have noted that the storytelling related to the ideas that ‘free-market economies, subject to the caveats of income distribution and externalities, yield the best of all possible worlds’ if everyone is ‘free to choose’ is ‘derived from an unsophisticated interpretation of standard economics’.50 As described by Barry Schwartz in an abstract for an article:

‘Americans now live in a time and a place in which freedom and autonomy are valued above all else and in which expanded opportunities for self-determination are regarded as a sign of the psychological well-being of individuals and the moral well-being of the culture. This article argues that freedom, autonomy, and self-determination can become excessive, and that when that happens, freedom can be experienced as a kind of tyranny. The article further argues that unduly influenced by the ideology of economics and rational-choice theory, modern American society has created an excess of freedom, with resulting increases in people’s dissatisfaction with their lives and in clinical depression. One significant task for a future psychology of optimal functioning is to deemphasize individual freedom and to determine which cultural constraints are necessary for people to live meaningful and satisfying lives.’51

Despite their intrinsic relationship, it may be fair to say that ‘the right to self-determination’ is more important than ‘the absence of paternalism’, in whatever shape or form, and that ‘the absence of manipulation’ is a prerequisite for ‘meaningful self-determination’. As discussed in Chapter 4 (data protection law) in the context of consent, freedom of choice may be challenged by the trader’s market power, including network effects, and the user’s interest in/dependence on their services. If, for instance, a particular school (or the majority of a class) decides to use a Facebook competitor for school-related interactions, the choice may be less free, as there is significant friction to not choosing this default. It may also be an interference with the right to self-determination if consent cannot be withdrawn without significant detriment.

Against this backdrop, it may make sense to perceive the principles of both self-determination and paternalism in a market context as a protection from unwanted interference from both state and traders with a view to preserving the personal autonomy of the consumer. The word ‘unwanted’ is intended to introduce the same ‘degree of ambiguity and openness’ as the word ‘sufficiently’ in Cass R. Sunstein’s above-mentioned definition of manipulation.

Freedom depends on the number and importance of the things that one (a) is free to do and (b) autonomously wants to do.52 Freedom might thus be said to describe not only the size of our ‘option set’ but also our awareness of what options there are.53 In that sense, the right to self-determination depends not only on freedom (to choose/consent) but also on the ability to create some sense of transparency, as discussed in Chapter 9 (transparency).

If transparency cannot be established, there may be a good argument for state intervention in the guise of regulation. In the context of privacy, it has been argued that ‘consumer privacy interventions can enable choice, while the alternative, pure marketplace approaches can deny consumers opportunities to exercise autonomy’.54 Insights from behavioural sciences, including persuasive technology, may be used to reconsider the idea of empowered consumers55 as an argument for uncontested belief in the right to self-determination and a fear of paternalism.56 As noted in the 1960s by British legal philosopher Herbert L.A. Hart,

‘paternalism—as a tool for protecting individuals against themselves—is a perfectly coherent policy’, later noting that ‘choices may be made or consent may be given without adequate reflection or appreciation of the consequences; or in pursuit of merely transitory desires; or in various predicaments when the judgment is likely to be clouded; or under inner psychological compulsion; or under pressure by others of a kind too subtle to be susceptible of proof in a law court.’57

An argument against state intervention is that consumers are the best qualified to determine their preference, which we challenged in Chapter 6 (human decision-making). The state may have the best intentions, but it does not know what our individual preferences are. Conversely, while traders may have much better insights into individual preferences than consumers have themselves, their intention may not be aligned with the best interests of individual consumers.

A desired level of paternalism is a political choice taking into account both economic efficiency and social welfare, as we discuss in Chapter 10 (human dignity and democracy). It seems as though paternalism—i.e., accepting state interference and limiting commercial interference—is less controversial throughout the European Union than it is in the U.S., where trust in markets seems to be higher than in state government.

5.2. Nudging and defaults

Nudging58 is a means of paternalism—originally framed as ‘libertarian paternalism’59—that applies findings within behavioural sciences to improve choice architecture. Nudging suggests that choice architecture should be designed so that people are ‘nudged’ in a particular (default) direction, while they remain free to choose other options. As expressed by Richard H. Thaler and Cass R. Sunstein:

‘A nudge, as we will use the term, is any aspect of the choice architecture that alters people’s behavior in a predictable way without forbidding any options or significantly changing their economic incentives. To count as a mere nudge, the intervention must be easy and cheap to avoid. Nudges are not taxes, fines, subsidies, bans, or mandates.’

‘The paternalistic aspect lies in the claim that it is legitimate for choice architects to try to influence people’s behavior in order to make their lives longer, healthier, and better. In other words, we argue for self-conscious efforts, by institutions in the private sector and by government, to steer people’s choices in directions that will improve their lives.’60

The goal (for governments) should be to ensure that ‘if people do nothing at all, things will go well for them’, including by establishing ‘default rules that serve people’s interests even if they do nothing at all’.61 Here, we argue that the same standard could be used as inspiration for determining whether a commercial practice or request for consent is preserving the consumer’s right to self-determination.62

Defaults are powerful nudges that are explicitly regulated in Article 25 GDPR (data protection by default), which may also spill over to the interpretation of the UCPD. It has, for instance, been found that around 96% of iOS 14.5 users have decided to rely on ‘restricted’ App Tracking63 in Apple’s AppTrackingTransparency feature, mentioned in Chapter 2 (data-driven business models). This could be seen as a clear indication of an obvious default for nudging, which, incidentally, is well aligned with the requirement of data protection by design and by default.

To illustrate the value of a default, Google is estimated to pay $8–12 billion to Apple every year for making ‘Google’s search engine the default for Safari’ and using ‘Google for Siri and Spotlight in response to general search queries’.64

5.3. Accountability and ethics

As mentioned in Chapter 4 (data protection law), accountability is an important principle in the GDPR that, in particular, ensures that authorities can gain insight into traders’ compliance. Similarly, the above-mentioned proposal for an Artificial Intelligence Act suggests requirements concerning technical documentation for providers of ‘high-risk AI’.65 In its guidelines for AI, the Council of Europe suggests that ‘AI applications should allow meaningful control by data subjects over the data processing and related effects on individuals and on society’.66

Although there is not a similar requirement in the UCPD, transparency towards users may serve accountability purposes under both data protection law and marketing law, as we discuss in the following chapter. Ultimately, the determination of unwanted behaviour modification is a normative question, in consideration of which ethics is likely to play a role. BJ Fogg offered (in 2003) the following ethical standard for the use of persuasive technology:

‘To act ethically the creators should carefully anticipate how their product might be used for an unplanned persuasive end, how it might be overused or how it might be adopted by unintended users. Even if the unintended outcomes are not readily predictable once the creators become aware of harmful outcomes, they should take action to mitigate them.’67

1. Allen W. Wood, The Free Development of Each (Oxford University Press 2014), chapter 12.

2. Douglas Rushkoff, Coercion (Riverhead 1999), p. 270.

3. Cass R. Sunstein, ‘Fifty Shades of Manipulation’, Journal of Marketing Behavior, 2016, pp. 213–244, p. 215.

4. Ibid., pp. 215–216.

5. Ibid., p. 234.

6. Article 8 UCPD.

7. Articles 8 and 2(1)(j) UCPD.

8. Article 9 UCPD.

9. See also recitals 6 and 14 as well as COM/2003/0356 final, COD 2003/0134, paragraphs 54, 57 and 71.

10. Case C‑628/17, Orange Polska, ECLI:EU:C:2019:480, paragraphs 33–34.

11. Case C‑628/17, Orange Polska, ECLI:EU:C:2019:480, paragraph 45.

12. Case C‑628/17, Orange Polska, ECLI:EU:C:2019:480, paragraphs 33, 46–47.

13. Case C‑628/17, Orange Polska, ECLI:EU:C:2019:480, paragraph 48.

14. See also Robert M Bond et al., ‘A 61-million-person experiment in social influence and political mobilization’, Nature 489, 2012, pp. 295–298; Eliza Mik, ‘The erosion of autonomy in online consumer transactions’, Law, Innovation and Technology, 2016, pp. 1–38; and Ryan Calo, ‘Digital Market Manipulation’, George Washington Law Review, 2014, pp. 995–1051.

15. Shoshana Zuboff, Surveillance Capitalism (Profile Books 2019), p. 8.

16. See <https://www.darkpatterns.org/types-of-dark-pattern>.

17. Opinion of Advocate General Trstenjak in Case C‑540/08, Mediaprint Zeitungs- und Zeitschriftenverlag, ECLI:EU:C:2010:161, paragraph 104 and footnote 82 with reference.

18. See also Forbrukerrådet, ‘Deceived by design’, report 27 June 2018, <https://www.forbrukerradet.no/undersokelse/no-undersokelsekategori/deceived-by-design/>.

19. James Williams, Stand Out of Our Light (Cambridge University Press 2018), p. 15.

20. Tim Wu, The Attention Merchants (Alfred A. Knopf 2017), p. 7.

21. Case C‑388/13, UPC Magyarország, ECLI:EU:C:2015:225, paragraph 42.

22. See also OFT v Purely Creative [2011] EWHC 106 (Ch), paragraph 106.

23. See also about ‘digital vulnerability’ Natali Helberger, Orla Lynskey, Hans-W. Micklitz, Peter Rott, Marijn Sax & Joanna Strycharz, EU Consumer Protection 2.0 (BEUC 2021).

24. Cathy O’Neil, Weapons of Math Destruction (Crown 2016), p. 72.

25. George A. Akerlof & Robert J. Shiller, Phishing for Phools (Princeton University Press 2015), p. 3.

26. For discussion of various consumer images, see Marco Dani, ‘Assembling the Fractured European Consumer’ (2011) European Law Review 36, pp. 362–384, pp. 380–381. See also Nassim Nicholas Taleb, Antifragile (Random House 2012), p. 295: ‘The notion of “average” may have little significance when one is susceptible to variations.’

27. See also EDPB, ‘Guidelines 8/2020 on the targeting of social media users (version 2.0)’, paragraph 12.

28. EDPB, ‘Guidelines 2/2019 on the processing of personal data under Article 6(1)(b) GDPR in the context of the provision of online services to data subjects’, paragraph 57.

29. EDPB-EDPS Joint Opinion 5/2021 of 18 June 2021 on the proposal for a Regulation of the European Parliament and of the Council laying down harmonised rules on artificial intelligence (Artificial Intelligence Act).

30. For a similar reasoning, see Advocate General Szpunar’s opinion in Case C‑673/17, Planet49, ECLI:EU:C:2019:246, paragraph 89.

31. See in general about cookie-consent Ronald Leenes & Eleni Kosta, ‘Taming the cookie monster with Dutch law: A tale of regulatory failure’, Computer Law & Security Review, 2015, pp. 317–335.

32. See also the Italian Competition Authority (ICA), ‘Facebook fined 10 million Euros by the ICA for unfair commercial practices for using its subscribers’ data for commercial purposes’, press release, 7 December 2018. It was found to be an aggressive commercial practice to impose significant restrictions on the use of the services for users that did not consent to data sharing.

33. Marty Neumeier, The Brand Gap (Expanded Edition, AIGA 2006), p. 99.

34. Subliminal can also be linked to the sublime—as in outstanding and transcendent excellence, see Tor Nørretranders, The User Illusion (Penguin 1999). Translation (by Jonathan Sydenham) of Tor Nørretranders, Mærk Verden (Gyldendal 1991).

35. See also Alan F. Westin, Privacy and Freedom (Atheneum 1967), p. 279; Sarah E. Igo, The Known Citizen (Harvard 2018), p. 128; and Shoshana Zuboff, Surveillance Capitalism (Profile Books 2019), p. 302.

36. Directive 2010/13/EU of 10 March 2010 concerning the provision of audiovisual media services.

37. Proposal for an Artificial Intelligence Act, section 5.2.2.

38. See, e.g., Natasha Dow Schüll, Addiction by Design (Princeton 2012).

39. <https://en.wikipedia.org/wiki/Auto-Play> (visited February 2021).

40. See, similarly, Annex I, point 29, of the UCPD, which prohibits ‘inertia selling’ (demanding payment for products not solicited by the consumer); and paragraphs 42–43 of Case C‑54/17, Wind Tre, ECLI:EU:C:2018:710, concerning SIM cards containing pre-activated services.

41. Allen W. Wood, The Free Development of Each: Studies on Freedom, Right, and Ethics in Classical German Philosophy (Oxford University Press 2014), chapter 12.

42. See, e.g., proposal for an Artificial Intelligence Act and European Commission, ‘Report on the safety and liability implications of Artificial Intelligence, the Internet of Things and robotics’, 19 February 2020, COM(2020) 64 final.

43. Nassim Nicholas Taleb, Antifragile (Random House 2012), p. 44.

44. Barry Schwartz, The Paradox of Choice (Ecco 2016, first published 2004), p. 76.

45. Gerald Dworkin, ‘Paternalism’, Stanford Encyclopedia of Philosophy, <https://plato.stanford.edu/entries/paternalism/> (visited February 2020).

46. James Williams, Stand Out of Our Light (Cambridge University Press 2018), p. 95.

47. Sarah Buss & Andrea Westlund, ‘Personal Autonomy’, Stanford Encyclopedia of Philosophy, <https://plato.stanford.edu/entries/personal-autonomy/> (visited February 2020); and Jane Dryden, ‘Autonomy’, The Internet Encyclopedia of Philosophy, <https://www.iep.utm.edu/autonomy/> (visited February 2020).

48. Daniel Kahneman, Thinking, Fast and Slow (Farrar, Straus and Giroux 2011), p. 411.

49. Cass R. Sunstein & Richard H. Thaler, ‘Libertarian Paternalism Is Not an Oxymoron’, 70 University of Chicago Law Review, 2003, pp. 1159–1202, p. 1167; and similarly in Richard H. Thaler & Cass R. Sunstein, Nudge—The Final Edition (Yale University Press 2021, first published 2008), p. 9.

50. George A. Akerlof & Robert J. Shiller, Phishing for Phools (Princeton University Press 2015), p. 150. See also Kate Raworth, Doughnut Economics (Chelsea Green Publishing 2017) and Ha-Joon Chang, 23 Things They Don’t Tell You about Capitalism (Penguin 2010).

51. Barry Schwartz, ‘Self-Determination: The Tyranny of Freedom’, American Psychologist, 2000, pp. 79–88.

52. Jon Elster, Sour Grapes (Cambridge University Press 2016, first published 1983), pp. 129 and 131.

53. Tim Wu, The Attention Merchants (Alfred A. Knopf 2017), p. 119.

54. Chris Jay Hoofnagle, Ashkan Soltani, Nathaniel Good, Dietrich J. Wambach & Mika D. Ayenson, ‘Behavioral Advertising: The Offer You Cannot Refuse’, Harvard Law & Policy Review, 2012, pp. 273–296.

55. See in general about the consumer’s role in Hans-W. Micklitz, ‘The Consumer: Marketised, Fragmentised, Constitutionalised’, in Dorota Leczykiewicz & Stephen Weatherill (eds), The Images of the Consumer in EU Law (Hart 2016), pp. 21–41. See also Fabrizio Esposito, ‘A Dismal Reality: Behavioural Analysis and Consumer Policy’, Journal of Consumer Policy, 2017, pp. 1–24, arguing that a more intrusive consumer policy has become easier to justify.

56. See about possible limitations in paternalism in Anne van Aaken, ‘Judge the Nudge: In Search of the Legal Limits of Paternalistic Nudging in the EU’, in Alberto Alemanno & Anne-Lise Sibony (eds), Nudge and the Law (Hart 2015), pp. 83–112.

57. Herbert L.A. Hart, Law, Liberty, and Morality (Stanford University Press 1963), pp. 31–33.

58. See in general Richard H. Thaler & Cass R. Sunstein, Nudge—The Final Edition (Yale University Press 2021, first published 2008) and Alberto Alemanno & Anne-Lise Sibony (eds), Nudge and the Law (Hart 2015).

59. Cass R. Sunstein & Richard H. Thaler, ‘Libertarian Paternalism Is Not an Oxymoron’, 70 University of Chicago Law Review, 2003, pp. 1159–1202.

60. Richard H. Thaler & Cass R. Sunstein, Nudge—The Final Edition (Yale University Press 2021, first published 2008), pp. 8 and 7, respectively.

61. Cass R. Sunstein, Simpler (Simon & Schuster 2013), pp. 100–102.

62. See also Anne van Aaken, ‘Judge the Nudge: In Search of the Legal Limits of Paternalistic Nudging in the EU’, in Alberto Alemanno & Anne-Lise Sibony (eds), Nudge and the Law (Hart 2015), pp. 83–112, pp. 90 and 93.

63. Estelle Laziuk, ‘iOS 14 Opt-in Rate—Weekly Updates Since Launch’, 25 May 2021 (updated 6 September 2021), <https://www.flurry.com/blog/ios-14-5-opt-in-rate-idfa-app-tracking-transparency-weekly/> (visited October 2021).

64. U.S. Department of Justice, ‘Justice Department Sues Monopolist Google For Violating Antitrust Laws’, press release of 20 October 2020. See p. 37 of the antitrust complaint, <https://s3.documentcloud.org/documents/7273448/DOC.pdf>.

65. See also Philipp Hacker, ‘Teaching fairness to artificial intelligence: Existing and novel strategies against algorithmic discrimination under EU law’, Common Market Law Review, 2018, pp. 1143–1185.

66. Council of Europe, ‘Guidelines on Artificial Intelligence and Data Protection’, 25 January 2019, II(12).

67. BJ Fogg, Persuasive Technology (Morgan Kaufmann 2003), p. 229.